Twitter’s Testing More Options to Help Users Avoid Negative Interactions in the App

- By Brett Belau

25 Sep

Abuse has always been a problem on Twitter, with the platform’s toxicity a source of constant debate and accusation almost since its inception.

But Twitter has been working to address this. After years of seemingly limited action, Twitter has, over the past 12 months, rolled out a range of new control options. These include reply controls to limit unwelcome commenters, warnings on potentially harmful or offensive replies, and Safety Mode, which alerts users when their tweets are getting negative attention.

Collectively, these new features could have a big impact, and Twitter’s not done yet. This week, Twitter previewed a few more control options that could help users avoid negative interactions, and the mental stress that can come with them, when their tweets become a focus for abuse.

First off, Twitter’s developing new ‘Filter’ and ‘Limit’ options, which, as Twitter notes, would be designed to help users keep potentially harmful content – and the people who create it – out of their replies.

As you can see here, the new option would enable you to automatically filter out replies that contain potentially offensive remarks, or that come from users who repeatedly tweet at you but whom you never engage with. You could also block these same accounts from ever replying to your tweets in the future.

Even more significantly, the Filter option would mean that any replies you choose to hide would not be visible to anyone else in the app either, except the person who tweeted them. That’s similar to Facebook’s ‘Hide’ option for post comments.

That’s a significant change in approach. Until now, Twitter has enabled users to hide content from their own view in the app, but others can still see it. The Filter control would give individual users the power to hide such comments entirely. That makes sense, given that they’re replies to the user’s own tweets. But you can also imagine it being misused by politicians or brands who want to shut down negative mentions.

That’s probably a more important consideration on Twitter, where the real-time nature of the app invites response and interaction, and in some cases, challenges to what people are saying, especially around topical or newsworthy issues. If people can then shut down that discussion, that could have its own potential impacts – but then again, the original tweet would still be there for reference, and users would theoretically still be able to quote tweet whatever they wanted.

And really, with reply controls already present in the app, it’s probably not a huge stretch, and it may well enable users to get rid of some of the trolls and creeps that lurk in their replies, which could improve overall engagement in the app.

In addition to this, Twitter’s also developing a new ‘Heads Up’ alert prompt, which would warn users about potentially divisive comment sections before they dive in.

That could save you from stepping into a quagmire of toxicity and unwittingly becoming a focus for abuse. As you can see in the second screenshot, the prompt would also encourage users to be more considerate when tweeting.

I doubt that would have a big impact on user behavior, but it could at least prompt more consideration in the process.

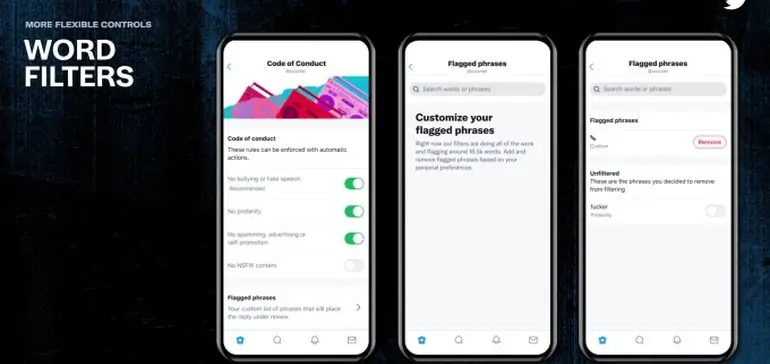

Twitter’s also developing new ‘Word Filters’, an extension of its existing keyword blocking tools that would rely on Twitter’s automated detection systems to filter out more potentially offensive comments.

As you can see here, the option would include separate toggles to automatically filter out hate speech, spam and profanity, based on Twitter’s system detection, providing another means to limit unwanted exposure in the app.

These seem like helpful additions. There are always concerns that people will use such tools as blinders to block out whatever they don’t want to deal with, which could limit helpful discourse and important perspectives. But if that’s what gives people a better in-app experience, why shouldn’t they be able to do that?

Of course, the ideal would be enlightened, intelligent debate on all issues, where people remain civil and respectful at all times. But this is Twitter, and that’s never going to happen. As such, providing more control options could be the best way forward, and it’s good to see Twitter taking more steps to address these key concerns.

Source: www.socialmediatoday.com, originally published on 2021-09-24 15:48:30

© Copyright 2002 – 2022 B2 Web Studios, a division of B2 Computing LLC. All rights reserved. All logos trademarks of their respective owners. Privacy Policy