Facebook Expands Climate Science Center to More Regions, Ramps Up Climate Misinformation Detection

- By Brett Belau

01 Nov

Facebook is taking stronger action to promote climate science and tackle related misinformation on its platforms, as part of a renewed push for a broader, more inclusive global effort to combat the growing climate crisis.

As explained by Facebook:

“Climate change is the greatest threat we all face – and the need to act grows more urgent every day. The science is clear and unambiguous. As world leaders, advocates, environmental groups and others meet in Glasgow this week at COP26, we want to see bold action agreed to, with the strongest possible commitments to achieve net zero targets that help limit warming to 1.5˚C.”

Facebook has repeatedly been identified as a key source of climate misinformation, and it clearly does play some role in this respect. But with this renewed stance, the company is looking to set clear parameters around what’s acceptable and what it will take action on, in order to play its part in the broader push.

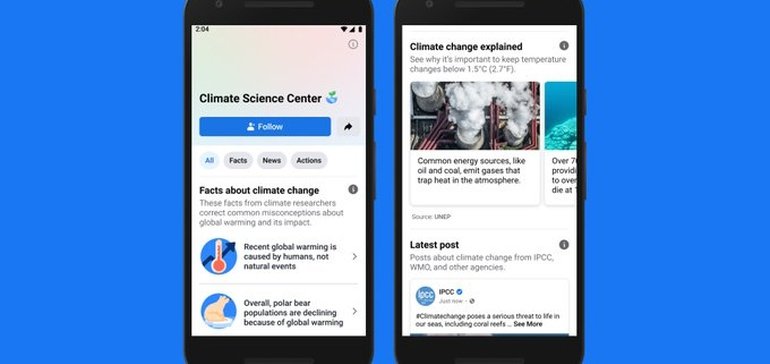

First off, Facebook is expanding its Climate Science Center to more than 100 countries, and it’s also adding a new section that will display each nation’s greenhouse gas emissions compared with its commitments and targets.

Facebook first launched its Climate Science Center in September last year to help connect users with more accurate climate information. The data behind the Center’s updates is sourced directly from leading information providers in the space, including the Intergovernmental Panel on Climate Change, the UN Environment Programme, and others.

The additional target-tracking data for each nation will provide an extra level of accountability, which could increase the pressure on each country to meet its commitments by raising broader awareness of its progress.

Facebook is also expanding its informational labels on posts about climate change, which direct users to the Climate Science Center for more information on related issues and updates.

In addition, Facebook is stepping up its efforts against climate misinformation during the COP26 climate summit specifically:

“Ahead of COP26, we’ve activated a feature we use during critical public events to utilize keyword detection so related content is easier for fact-checkers to find — because speed is especially important during such events. This feature is available to fact-checkers for content in English, Spanish, Portuguese, Indonesian, German, French and Dutch.”

I mean, that does raise the question of why Facebook doesn’t use this process all the time, but the assumption is that it’s a more labor-intensive approach, which is only feasible in short bursts.

Combating such claims as they ramp up (Facebook also notes that climate misinformation ‘spikes periodically when the conversation about climate change is elevated’) should help to lessen their impact and counter some of the amplification that comes with Facebook’s scale.

Finally, Facebook says that it’s working to bring its own internal operations and processes in line with emissions targets.

“Starting last year, we achieved net zero emissions for our global operations, and we’re supported by 100% renewable energy. To achieve this we’ve reduced our greenhouse gas emissions by 94% since 2017. We invest enough in wind and solar energy to cover all our operations. And for the remaining emissions, we support projects that remove emissions from the atmosphere.”

The next step for its operations will be to partner with suppliers who are also aiming for net zero, which, once fully in effect, will offset the impact of its business entirely.

Facebook’s record on this front is spotty, not because of its own initiatives or efforts as such, but because of the way the News Feed algorithm can amplify controversial content. That inadvertently gives users an incentive to share more left-field, controversial, and anti-mainstream viewpoints in order to get attention and spark engagement in the app.

This is a big problem with Facebook’s systems, and one that Facebook itself has repeatedly pointed to, albeit indirectly. Facebook’s argument is that this type of content sees increased attention on the platform not because of Facebook itself, but because of human nature: people are now able to share and engage with the topics that resonate with them. In Facebook’s view, this is a people problem, not a Facebook one.

As Facebook’s Nick Clegg recently explained in relation to a similar topic, broader political division:

“The increase in political polarization in the US pre-dates social media by several decades. If it were true that Facebook is the chief cause of polarization, we would expect to see it going up wherever Facebook is popular. It isn’t. In fact, polarization has gone down in a number of countries with high social media use at the same time that it has risen in the US.”

So, according to the evidence Clegg cites, it’s not Facebook that’s the issue. Rather, the fact that people now have more ways to discuss and engage with such topics can make it seem as though Facebook is playing a bigger part.

But that lets Facebook off the hook a bit. A key problem is the incentive that Facebook has built in, in the form of Likes and comments, and the dopamine rush that people get from them. That gives people a reason to share more controversial content, because it sparks more notifications and boosts their presence. So there is, inherently, a process on Facebook that drives this type of behavior, whether Facebook itself wants to acknowledge it or not.

Which is why it’s important that Facebook does take action. But the real question is: how effective will, or even can, such countermeasures be, especially at Facebook’s scale?

Source: www.socialmediatoday.com, originally published on 2021-11-01 19:45:43
