
Meta Shares Its Latest Advances in Automated Object Identification, a Key Development in Its AR Push

- By Brett Belau

28 Feb

Meta has outlined its latest advances in automated object identification within images. According to Meta, its updated SEER system is now the largest and most advanced computer vision model available.

SEER, whose name is derived from ‘self-supervised’, can learn from any random collection of images on the internet without the need for manual curation and labeling. That accelerates its capacity to identify a wide array of different objects within a frame, and it can now outperform the leading industry-standard computer vision systems in terms of accuracy.

And it’s only getting better. The original version of SEER, which Meta first announced last year, was trained on over 1 billion images. The new version is ten times the scale, with 10 billion parameters.

As explained by Meta:

“When we first announced SEER last spring, it outperformed state-of-the-art systems, demonstrating that self-supervised learning can excel at computer vision tasks in real world settings. We’ve now scaled SEER from 1 billion to 10 billion dense parameters, making it to our knowledge the largest dense computer vision model of its kind.”

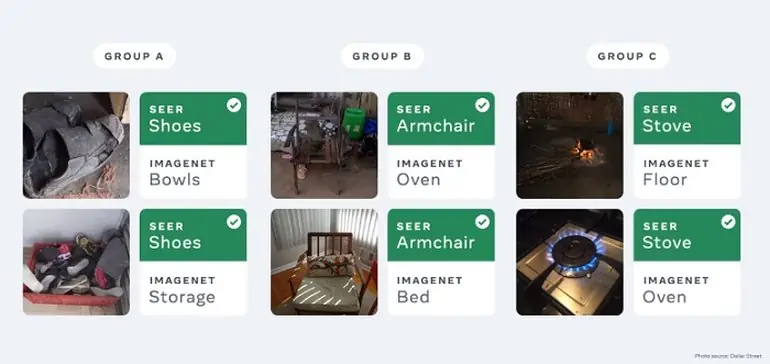

Of particular note is the system’s capacity to identify images of people from different cultures, and to assign meaning and interpretation to objects from different regions of the world.

As Meta explains:

“Traditional computer vision systems are trained primarily on examples from the U.S. and wealthy countries in Europe, so they often don’t work well for images from other places with different socioeconomic characteristics. But SEER delivers strong results for images from all around the globe – including non-U.S. and non-Europe regions with a wide range of income levels.”

That’s significant because it broadens the system’s understanding of different objects and how they’re used, which can help to improve accuracy and provide better automated descriptions of what’s in a frame. In turn, that can give visually impaired users more context, and support product identification matching, sign recognition, branding alerts and more.

Meta also notes that the system is a key component of its next big shift, towards the metaverse.

“Advancing computer vision is an important part of building the Metaverse. For example, to build AR glasses that can guide you to your misplaced keys or show you how to make a favorite recipe, we will need machines that understand the visual world as people do. They will need to work well in kitchens not just in Kansas and Kyoto but also in Kuala Lumpur, Kinshasa, and myriad other places around the world. This means recognizing all the different variations of everyday objects like house keys or stoves or spices. SEER breaks new ground in achieving this robust performance.”

Meta has been working on improved object identification for years, and has made significant advances in automated captions, screen reader descriptions and more.

It’s also working on identifying objects within video, which is the next stage. While that isn’t viable as yet, it could eventually lead to all-new data insights by enabling you to learn more about what each individual user posts about, and how to reach them with your promotions.

Even right now, this can be valuable. If you knew, for example, that a certain subset of Instagram users were more likely to post a picture of their meal, based on previous posting patterns, that could help with your ad targeting. Extrapolate that to any subject, with highly accurate data matching, and it could be a great way to get maximum value from your ad strategy.

And that’s before considering the more advanced applications that Meta notes, like AR overlays, or using the system to improve its video algorithms so that they show people more of the content they’re likely to engage with, based on what’s actually in each frame.

The next stage is coming, and systems like this will underpin major shifts in online connectivity.

You can read more about Meta’s SEER system here.

Source: www.socialmediatoday.com, originally published on 2022-02-28 17:08:09


© Copyright 2002 – 2022 B2 Web Studios, a division of B2 Computing LLC. All rights reserved. All logos trademarks of their respective owners. Privacy Policy
