
Facebook Adds New Alerts and Individual User Penalties to Help Stop the Spread of Misinformation

- By Brett Belau

26 May

Facebook is taking another step in its renewed push to reduce the spread of misinformation via its apps, this time through the addition of new informational alerts, and increased individual user penalties, based on the distribution of content that has been flagged as false by fact-checking teams.

That could be a major step in reducing the overall amplification of such content online.

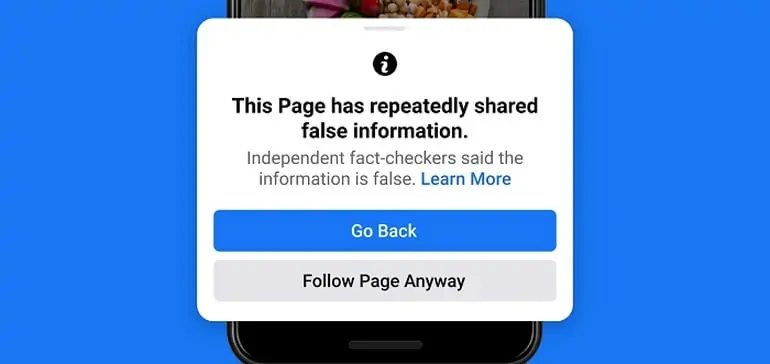

First off, Facebook is launching a new pop-up to alert visitors to Facebook Pages that have been repeatedly found to be sharing false information, as rated by Facebook fact-checkers.

As you can see here, the new pop-up makes it clear that the Page has been found to be sharing questionable content in the past, which will likely lead visitors to view its updates with a more skeptical eye. Or it’ll reinforce the beliefs of users who are opposed to Facebook fact-checking at all.

As explained by Facebook:

“We want to give people more information before they like a Page that has repeatedly shared content that fact-checkers have rated, so you’ll see a pop up if you go to like one of these Pages. You can also click to learn more, including that fact-checkers said some posts shared by this Page include false information and a link to more information about our fact-checking program. This will help people make an informed decision about whether they want to follow the Page.”

It could be a good way to increase awareness and reduce the sharing of misinformation on the network. While some will straight up oppose Facebook being more overt in these alerts, hopefully the broader majority of users will have more influence on subsequent engagement trends, leading to a reduction in the sharing of false content.

In addition to this, Facebook is also upping the penalties for people found to be distributing misinformation.

“Starting today, we will reduce the distribution of all posts in News Feed from an individual’s Facebook account if they repeatedly share content that has been rated by one of our fact-checking partners. We already reduce a single post’s reach in News Feed if it has been debunked.”

This one will definitely stir up the Facebook conspiracy theorists, and those who believe the platform has no right to stop them sharing whatever they like. Now, their Facebook and Instagram networks will be less likely to see their posts if they keep sharing debunked conspiracy theories and misinformed reports. They’ll see that as censorship, reinforcing their views about ‘big brother’ seeking to control the flow of information.

But Facebook is right to act. The company works with a range of fact-checkers for this very reason, to ensure that false information is being flagged, and removed where possible, in order to reduce its spread, and stop the use of Facebook’s network to amplify such narratives.

This has become much more pressing of late. Conspiracy theories around the COVID-19 vaccine are impeding optimal take-up and slowing broader recovery efforts, while the Capitol Riots earlier this year, which were sparked by the false narrative that the US election was ‘stolen’ despite all investigations finding no significant wrongdoing, further underlined the major impact that such misinformation pushes can have. And with Facebook operating the largest interconnected network of humans in history, it’s in a unique position to facilitate the spread of such content on a broad scale. That’s why it’s important that the platform takes action, and looks to disrupt the flow of misinformation when it’s identified.

That last point is important: the posts and people that will be impacted by these changes are not just random folk who’ve shared a personal opinion on, say, their political beliefs. The content that will be flagged relates to reports and claims that have been proven false by experts, and double-checked by third-party fact-checkers to assess the full claims.

Some users will look to twist this, and claim that Facebook is impinging on free speech, but Facebook has a right, and even an obligation, to ensure that its network is not being used to amplify dangerous counter-narratives. It’s difficult to argue that these conspiracy theories haven’t become more influential in recent times, and Facebook has likely played a part in this, which is why it’s important for the company to take more action, where possible, to reduce the spread.

Finally, Facebook is also launching new notifications to better outline when content that users have shared has subsequently been fact-checked.

“We currently notify people when they share content that a fact-checker later rates, and now we’ve redesigned these notifications to make it easier to understand when this happens. The notification includes the fact-checker’s article debunking the claim as well as a prompt to share the article with their followers. It also includes a notice that people who repeatedly share false information may have their posts moved lower in News Feed so other people are less likely to see them.”

The measure is another step in increasing transparency in the process, and better outlining the specific actions taken in each case.

Again, this is an important update from Facebook, and depending on just how significant the subsequent reach restrictions and content impacts are, it could have a major impact in slowing the spread of misinformation, not just on Facebook, but online more broadly.

According to Pew Research, around 71% of people now get at least some of their news input from social media platforms, with Facebook leading the way, which means that there’s significant potential for the platform to influence opinion through the sharing of perceived ‘facts’ among its audience.

Add to this the power of peer influence, in seeing content shared by those you love and respect, and Facebook is clearly the most powerful platform for exerting impact over what people believe.

For many years, Facebook mostly turned a blind eye to this, preferring to stay hands-off and let users share what they like, in the name of free speech. But now we know, very clearly, what the consequences of that approach can be.

Hopefully this is another step in the right direction on this front.

Source: www.socialmediatoday.com, originally published on 2021-05-26 14:32:26


